Thormulator

Thor Madsen, June 2024

As the creator of Thormulator, I am excited to introduce this innovative tool for improvisation. Thormulator enhances solo performances with real-time, interactive musical responses. By interpreting your MIDI and audio inputs, it dynamically modifies sound, alters structures, and generates new audio, fostering a playful musical dialogue.

The essence of Thormulator lies in its ability to create an intuitive feedback loop between the musician and the software. Imagine playing a sequence and hearing an unexpected yet musically coherent response that pushes your performance in a new direction.

With decision logic ensuring musically relevant responses, Thormulator opens up a world of creative potential, pushing the boundaries of traditional music-making and inviting the performer to explore new musical ideas.

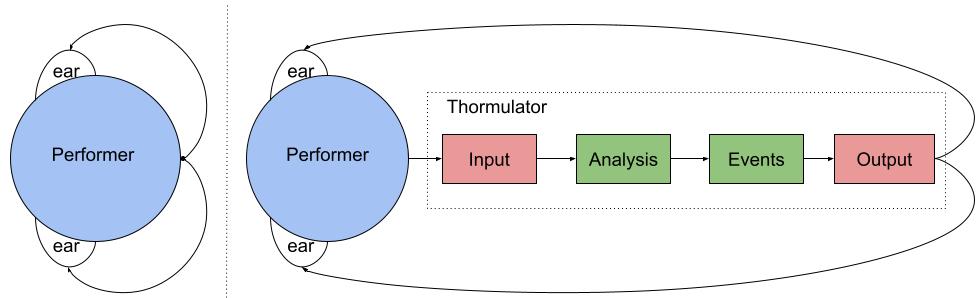

Showcasing the raw interaction between the performer and Thormulator.

Thormulator: A Real-Time Musical Partner for the Adventurous Performer

Thor Madsen, Copenhagen, June 18, 2024

Introduction

Brief Overview

In the ever-evolving landscape of music technology, Thormulator is an innovative tool designed to augment the musical palette of a solo performer through real-time transformations and interactive responses. This article explores Thormulator’s features, design, architectural framework, and future potential.

Thormulator is a dynamic, real-time musical collaborator that analyzes both MIDI data and audio signals, interprets musical gestures – specific features of the performer’s playing, such as dynamics, timing, pitch, and articulation – and responds by adjusting sound, altering structure, or generating new audio. It adapts to various musical contexts through sophisticated awareness logic and decision-making algorithms, ensuring musically appropriate and contextually relevant responses.

A significant driving force behind Thormulator’s development has been my curiosity about the intricate relationship between performer and machine, particularly how it affects the sense of mental ownership in performance. This project investigates how technology can enhance creative possibilities, establishing a responsive feedback loop that inspires new ways of musical interaction. By generating musical structures from improvised input, Thormulator facilitates a form of instant composition, enabling the production of unique and original music.

Initial Goals

I began work on my artistic research project Performance Machine in April of 2021. The outcome of the research is the proprietary interactive performance app Thormulator. The project was initially based on two main ideas:

Firstly, I wanted to create an app I could improvise with—an app which would feed me back meaningful musical responses in direct reaction to my playing, potentially creating a new type of feedback loop.

Secondly, I was imagining ways of playing into a sound, i.e., shaping the sound of other instruments with my playing in the same way you can shape a bag of rice with your hands.

The aim was not human realism or any kind of objectivity. For both technical and aesthetic reasons, the project did not involve machine learning.

One can summarize my goal as designing a system which:

- Follows the performer in real time

- Has a sufficient number of responses to create a wide range of possible outputs

- Responds in a way that feels meaningful to the performer

- Allows the performer the maximum amount of musical freedom and range of expression

- Works without the need to use external controllers

In using such a system, I wanted to see if it is possible to establish a feedback loop between performer and machine which can inspire a new way of interacting with performance software, possibly a new type of music.

In the single-player feedback loop, musical responses are generated as a result of an impulse in the performer. The performer simultaneously creates responses and listens to these responses, the perception of which informs the next impulse along with internalized knowledge, muscle memory, stored recollections of previous performances, etc. On the right side, the feedback loop is extended with Thormulator, which by applying a set of rules to the analysis of the input from the performer adds new musical responses like chords, drones, beats, and basslines, and modulates previously added responses as well as the audio input from the performer. This feedback loop has similarities with that of a group of people playing together but is fundamentally different in that ‘outside’ responses are reflections of the performer’s ‘inside’ responses through the prism of the design and rules of the system.

I embraced the idea of surrendering control as so eloquently put by Brian Eno:

“Control and surrender have to be kept in balance. That’s what surfers do – take control of the situation, then be carried, then take control. In the last few thousand years, we’ve become incredibly adept technically. We’ve treasured the controlling part of ourselves and neglected the surrendering part…I want to rethink surrender as an active verb…It’s not just you being escapist; it’s an active choice.”

Brian Eno interviewed by Stuart Jeffries, The Guardian 2010.

The aim has been to create a system where the performer is somewhere in between playing to provoke specific responses (control) and reacting to responses without paying attention to how they were initiated (surrendering control).

Background and Inspirations

Historical Context and Inspirations

Throughout history, musicians have blended creativity with technology. Pioneers like Laurie Anderson and contemporary artists like Björk, Alva Noto, and Ryoji Ikeda have used custom instruments and software to push the boundaries of music and technology in live performances.

While working on Thormulator, especially when attempting to describe it in words, it has become increasingly clear to me how the project touches almost all aspects of my musical life—how I experience music as a listener and practitioner, my views on music theory, how I teach, how I interact with other musicians, and in general what you could call my whole musical belief system. This belief system is rooted in personal experience and studies of various kinds.

I had the distinct privilege of playing with conductor Butch Morris over a stretch of 10 years both in New York and all over Europe. Butch invented Conduction, a language of hand and baton gestures allowing him to improvise with an orchestra of any size, kind, or musical orientation. Interestingly, no matter who he conducted—a symphony orchestra, the Nublu Orchestra with 10 of New York’s finest jazz musicians, or a group of poets—it always sounded like him. His language was all about structure, leaving it up to the musicians to interpret and improvise within the directions given. Butch created both structured improvisation and improvised structures while allowing each musician to sing in his or her own voice. This concept has been hugely influential on my thought process.

Playing with Butch required the musician to perform a sort of real-time sonification of his hand signals with a great deal of personal freedom and responsibility. It was not enough to mindlessly execute; if you did not put your whole musicality into it, the music suffered, and he got real mad. Butch invented a new language in the search for new music and new meanings. I am trying to do something similar—the guitar is my baton and the band is a virtual one.

The Long Lead-Up

I was one of the first people to bring a laptop on stage when the first-generation Apple PowerBook G4 Titanium came out in 2001, and I was even mocked for it in a review in some LA newspaper, something along the lines of ‘is he checking his email on stage…?’! I experimented with many different setups using various trigger systems and hands-on controls to be able to improvise with the laptop in a live performance. The pinnacle of these attempts came during my years performing and recording with Wazzabi, my band with drummer Anders Hentze. I also played in bands where I had to play to a backing track over which I had no control.

An interesting aspect of these experiences is what I will call the mental ownership of the performance. In my experience, playing with fixed backing tracks tends to lower the mental ownership, creating a certain detachment from the performance and making me feel more like a cameo than a supporting actor or a lead. I think this is one of the main reasons DJs keep touching their gear, even when it doesn’t produce any audible difference: to claim mental ownership and to project this both inwards and outwards. I felt I could somewhat reclaim ownership by adding trigger systems, using controllers with many knobs, restructuring loops on the fly by clicking buttons, etc. All this is hard to do while playing guitar at the same time. So what about using your feet? That works to some extent, but after a while you start to feel like a clumsy tap dancer, and much of the focus on the music is lost in the process.

Eventually, I stopped using laptops onstage around 2014 and have only done so rarely since. Thormulator is my attempt to reconnect with performance software in a new, more intuitive way.

The Process

Turning these somewhat abstract ideas into an actual piece of working software has been a monumental personal challenge, as I knew almost nothing about programming when I started out. I had dabbled a bit with Max/MSP, a software environment widely used in electronic music for both composing and performance. In Max/MSP you have to drag virtual patch cables on the screen to connect objects, and it drove me nuts after a while, so I opted for the purely text-based language SuperCollider. In the first months, I was mentored over video sessions and email by the seasoned and accomplished Eli Fieldsteel, director of the University of Illinois Experimental Music Studios. The project started as a small research project under The Danish National Academy of Music and has since been developed further of my own volition.

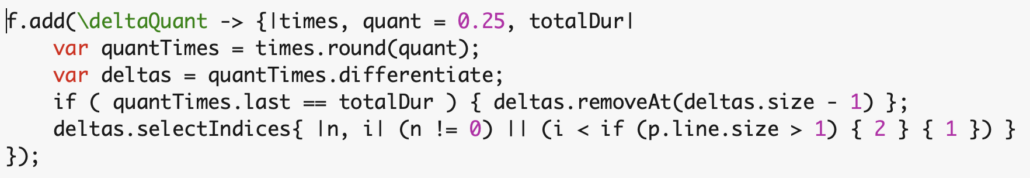

Developing the project involved both general programming and DSP (Digital Signal Processing) programming, a vast subject on its own. Both are handled by SuperCollider, which is a three-in-one solution: a language (sclang), a code editor (scIDE), and an audio server (scsynth), which handles all the DSP operations like analyzing and generating sound.

By December 2021, I had a proof of concept—a somewhat buggy early prototype. Since then, I have rewritten the code numerous times, added more features, and refined every aspect of the app to the best of my abilities.

System Design and Capabilities

Design Choices

My overall goal has been to make the connection between performer and Thormulator as obvious as possible to the performer and audience alike. As a consequence, synth sounds are relatively standard and modulation is focused on easily perceptible parameters like amplitude, pitch, filter cutoff frequency, and panning. I feel these choices make it easier to follow the interaction compared to using more abstract sound design.

In cases where it seemed intuitively logical to connect a certain gesture to a certain response, I opted to do so. As an example, how fast the performer plays (measured as the average delta time between notes) determines the rate of modulation in many instances: the shorter the delta times, the faster the modulation. Another example: how loud the performer is playing is proportional to how loud the responses are, which also seems sensible: ‘when I play soft, you play soft’. On the other end of the spectrum, playing crescendo and ritardando at the same time (which is surprisingly hard) can trigger a filter sweep, which is not intuitive at all and is thought of more as an easter egg.
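The gesture-to-response mappings described above can be sketched in a few lines. This is a minimal illustration in Python, not Thormulator's actual SuperCollider code: the function names, the clamping range for the modulation rate, and the strict "every note louder, every gap longer" test for a simultaneous crescendo and ritardando are all assumptions made for the example.

```python
from statistics import mean

def average_delta_time(onsets):
    """Mean time in seconds between consecutive note onsets (needs at least two onsets)."""
    deltas = [b - a for a, b in zip(onsets, onsets[1:])]
    return mean(deltas)

def modulation_rate(onsets, min_hz=0.1, max_hz=10.0):
    """Shorter average delta time (faster playing) gives a faster modulation rate.

    The rate is taken as notes per second, clamped to an assumed range.
    """
    rate = 1.0 / average_delta_time(onsets)
    return max(min_hz, min(max_hz, rate))

def response_level(velocities):
    """'When I play soft, you play soft': scale mean MIDI velocity (0-127) to 0.0-1.0."""
    return mean(velocities) / 127.0

def is_crescendo_ritardando(onsets, velocities):
    """True if every note is louder than the last and every gap is longer than the last."""
    deltas = [b - a for a, b in zip(onsets, onsets[1:])]
    louder = all(b > a for a, b in zip(velocities, velocities[1:]))
    slower = all(b > a for a, b in zip(deltas, deltas[1:]))
    return len(deltas) >= 2 and louder and slower
```

A real detector would likely tolerate small deviations instead of requiring strictly increasing values, which is part of why the gesture is hard to hit on purpose.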

I have been quite deliberate and careful in the use of randomness. The general principle has been to use a modest amount of restricted randomness for smaller details – nothing happens out of the blue, but randomness can add a little salt and pepper to responses. Randomness is also used as a way of restricting output, for instance by muting some responses following a ‘biased coin toss’, e.g. 90% chance of heads (play), 10% chance of tails (mute).
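The biased coin toss is simple to express in code. The sketch below is a Python illustration under assumed names (`biased_coin`, `thin_out`), not the app's own implementation; only the 90/10 split comes from the text.

```python
import random

def biased_coin(p_play=0.9, rng=random):
    """Return True (play) with probability p_play, otherwise False (mute)."""
    return rng.random() < p_play

def thin_out(notes, p_play=0.9, rng=random):
    """Keep each note with probability p_play; the remaining notes are muted (dropped)."""
    return [n for n in notes if biased_coin(p_play, rng)]
```

Passing a seeded `random.Random` instance as `rng` makes a run repeatable, which helps when debugging a response that is otherwise different every time.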

Unique Capabilities of Thormulator

Thormulator functions as a dynamic, real-time musical collaborator. It converts audio signals into MIDI data, interprets musical gestures, and responds by adjusting sound, altering structure, or generating new audio. The system adapts to various musical contexts through sophisticated awareness logic and decision-making algorithms, ensuring musically appropriate and contextually relevant responses.

A distinctive aspect of Thormulator is its reliance on rule-based systems rather than AI or ML. This approach not only aligns with artistic values, offering a level of transparency and predictability akin to the charm of lo-fi aesthetics and imperfect replicas, but also provides intuitive control over the musical interaction. The transparency of rule-based systems allows both performers and audiences to follow the interaction more easily, enhancing the overall artistic experience. While Thormulator currently operates on these rule-based principles, future iterations may explore the integration of artificial intelligence or machine learning algorithms.

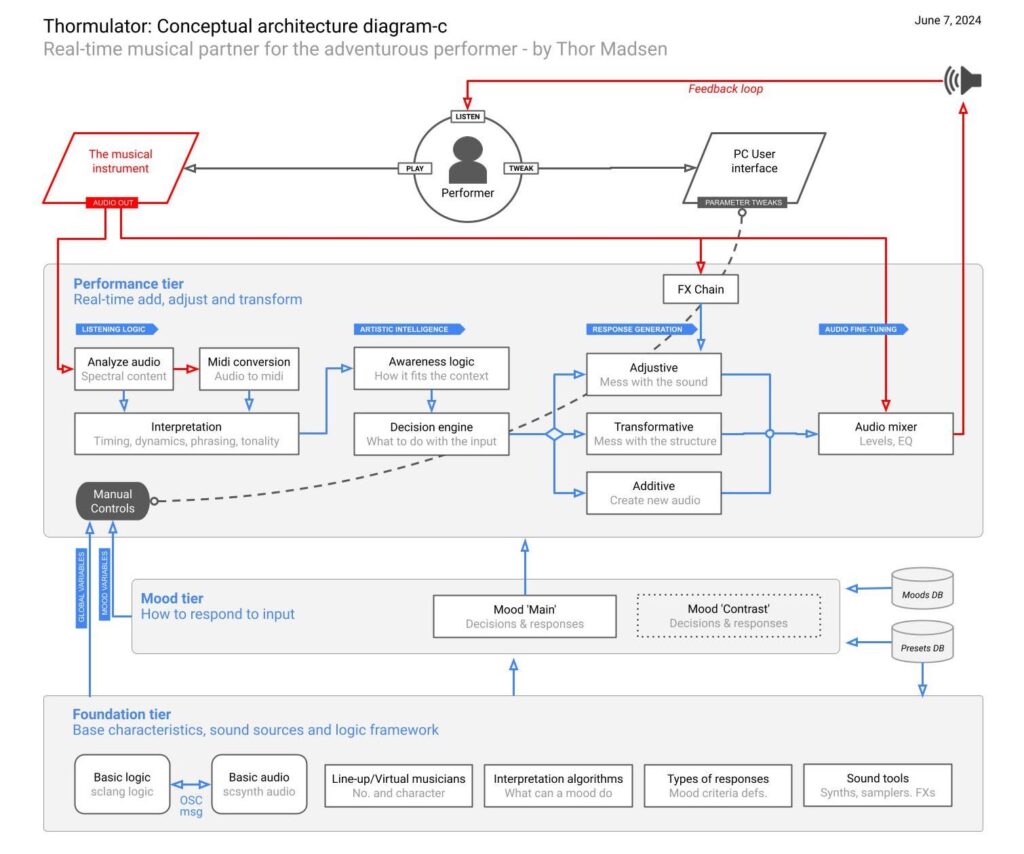

Conceptual Architecture

Performance Tier: This tier is responsible for real-time musical adjustments and transformations, including the generation of all responses. It integrates components from the Foundation Tier and the Mood Tier to determine how responses are generated based on the performer’s input. Key components include:

- Listening Logic: Analyze Audio (spectral content), MIDI Conversion (audio to MIDI), and Interpretation (timing, dynamics, phrasing, tonality).

- Artistic Intelligence: Awareness Logic (how it fits the context) and Decision Engine (what to do with the input).

- Response Generation: Adjustive (mess with the sound), Transformative (mess with the structure), and Additive (create new audio).

- Audio Fine-Tuning: Audio mixer (levels, EQ).

Mood Tier: This tier determines system responses based on predefined mood templates and a comprehensive mood database. It influences the response generation process in the Performance Tier by shaping the system’s behavior according to the mood selected. Thormulator’s “Moods” go beyond traditional musical genres or styles, representing complex, adaptive responses that can shift dynamically based on the performer’s input. A single mood can accommodate many musical genres or styles, responding fluidly to the nuances of what the performer plays. Think of moods as interactive frameworks. They enhance and complement the performer’s input by adjusting to the performer’s musical expressions in real time. As of now, only one mood (‘Main’) has been developed, with others to come. Key components include:

- Mood ‘Main’: Decisions and responses.

- Mood ‘Contrast’: Decisions and responses (future).

- Mood Database: Stores mood templates and presets.

Foundation Tier: This tier serves as the backbone of the system, establishing the core logic and sound sources. It encompasses a range of functionalities, including interpretation algorithms, virtual musicians, and diverse sound tools. Key components include:

- Core logic (sclang logic).

- Core audio (scsynth audio).

- Line-up/Virtual musicians (number and character).

- Interpretation algorithms (what can a mood do).

- Types of responses (mood criteria definitions).

- Sound tools (synths, samplers, FXs).

Throughout this project, I see Thormulator as a practical implementation of a broader concept: creating a system that dynamically responds to a performer’s input with musical structures. This version of Thormulator represents just one possible realization of this idea, demonstrating how technology can facilitate real-time interactive music performance.

Thormulator fits into several contemporary music technology categories, particularly Interactive Music Systems and Generative Music Systems, by dynamically responding to a performer’s input to create real-time musical structures. While it shares features with Adaptive Musical Instruments (AMIs) and Augmented Instruments, which enhance traditional instruments with technology, Thormulator operates as a standalone system that generates and manipulates music through performer interaction. Unlike AMIs, which focus on inclusivity, Thormulator is designed to expand the expressive range of a solo performer, emphasizing real-time composition and interaction. This unique blend of capabilities distinguishes Thormulator within the broader landscape of music technology.

Experience and Application

Personal Experience with Thormulator

Exploring the capabilities of the app has been an enriching experience, especially since I am currently its only user. One notable aspect is its effectiveness as an ear training tool. Even though the basslines, chords, and rhythms are generated from the performer’s own playing, it is surprisingly easy to lose track of melodic and harmonic changes, a deliberate design choice that adds to the challenge.

Furthermore, the app offers opportunities for experimentation and growth. Basslines, chords, and rhythms can be transposed, rearranged, or mirrored in various ways, adding complexity and depth to the musical experience. Certain responses also demand rhythmic precision, requiring the performer to hit specific subdivisions of the current tempo accurately.

An intriguing aspect is the app’s demand for rhythmic versatility. Some responses prompt the performer to play off-beat within the current subdivision, avoiding the downbeats entirely. Others require polyrhythmic skills, such as playing 4 over 3 or 3 over 4, challenging the performer’s rhythmic understanding and execution.

Moreover, the app encourages exploration of rhythmic grouping techniques. Whether through accent patterns—like emphasizing every 5th subdivision—or delta times—such as playing dotted eighth notes—the app fosters development in precision and rhythmic awareness. This comprehensive rhythmic training is invaluable, offering a platform for honing skills that extend beyond traditional musical boundaries.

Stylistic Bias

The possible outputs of the Performer-Thormulator feedback loop are defined by three entities:

- The imagination and ability of the performer

- The core design in the foundation tier

- The specifics of the currently selected mood

Now, because I am simultaneously the performer and the designer of everything, my own musical bias is amplified throughout the feedback loop. This makes it hard for me to identify exactly how much each entity contributes to the combined stylistic flavor.

When I take a step back and listen to Performance 1, I hear a good deal of 70s Miles Davis. Performance 2 sounds more like Jamaican dub music with a slight jazz tinge. In hindsight, this might not be so surprising since both Jamaican dub and in particular Miles Davis have been major musical influences on me. I did not think of Miles or Jamaican dub when designing the system, and the dub flavor of Performance 2 in particular came as a big and welcome surprise to me—being surprised in a musically interesting way is one of the main objectives of Thormulator.

It will be interesting to see what music can be created when new moods altering the ‘musicality’ of the system are developed, somebody else is the performer, and possibly in a more distant future, moods are developed in collaboration with, or by, other people.

Educational Potential

Playing with the app is great ear training. Even though the basslines, chords, and rhythms are generated by the performer, it is easy to lose track of melodic and harmonic changes (which is kind of the point), plus lines and chords can be transposed, rearranged, or mirrored in different ways, adding to the challenge. Regarding rhythm, certain responses require the performer to hit the subdivisions of the current tempo with a certain precision. For instance, to have Thormulator return a drum fill, the performer has to play off-beat in the current subdivision and not hit the downbeats. Other responses require the ability to play 4 over 3 or 3 over 4. Yet another response requires the ability to group subdivisions, either by accents—for instance, an accent on every 5th subdivision—or by delta times, for instance, by playing dotted 8th notes. This is also great training in both precision and general rhythmic awareness.
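To make the rhythmic conditions above concrete, here is a hedged Python sketch of how such checks could work. It is not Thormulator's code: the function names, the quarter-subdivision tolerance, and the choice to treat every beat as a downbeat are assumptions for illustration.

```python
def to_grid(onsets, bpm, per_beat=4, tolerance=0.25):
    """Snap onsets (seconds) to subdivision indices.

    Returns None if any onset is further than `tolerance` (a fraction of one
    subdivision) from the nearest grid point.
    """
    step = 60.0 / bpm / per_beat
    indices = []
    for t in onsets:
        idx = round(t / step)
        if abs(t / step - idx) > tolerance:
            return None
        indices.append(idx)
    return indices

def is_off_beat(onsets, bpm, per_beat=4):
    """True if all onsets sit on the grid and none of them lands on a beat."""
    grid = to_grid(onsets, bpm, per_beat)
    return grid is not None and len(grid) > 0 and all(i % per_beat != 0 for i in grid)

def grouping(onsets, bpm, per_beat=4):
    """Return n if every delta time spans exactly n subdivisions, else None."""
    grid = to_grid(onsets, bpm, per_beat)
    if grid is None or len(grid) < 2:
        return None
    spans = {b - a for a, b in zip(grid, grid[1:])}
    return spans.pop() if len(spans) == 1 else None
```

With sixteenth-note subdivisions at 120 bpm, evenly spaced dotted 8th notes give `grouping(...) == 3`, and a note on every 5th subdivision gives 5. Grouping by accents would need velocities as well and is left out of this sketch.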

Demonstrations and Future Prospects

Thormulator GUI Demonstrations and Detailed Walkthrough

While Thormulator is not currently available to the public, the following videos and accompanying text provide a comprehensive understanding of how Thormulator works in practice.

Thormulator features various virtual instruments, referred to as “elements” in the GUI and in the videos, each responsible for a type of response. These virtual instruments play sounds through instrument groups which modulate the sound by using FXs and modulators.

Virtual Instruments:

- Play: Plays incoming MIDI in both ‘ambient’ and ‘repeat’ state.

- Drone: Sets, moves, or stops a drone based on the ‘root note’ played. Various gestures adjust the drone’s filter settings.

- BassLine: Active in repeat mode, plays basslines derived from the performer’s notes. Includes octave mapping and transposition capabilities.

- Chord: Generates chords based on scales and root notes. Different modes influence chord playback and muting.

- Chord Rhythm: Plays staccato chords with timing based on the performer’s input. Modes influence chord repetition and muting.

- Shimmer Chord: Continuously arpeggiates a chord in ambient mode and plays when no other chords are present in repeat mode.

- Dynamic Chord: Plays chords relative to the repeat, with new chords overriding old ones.

- Rhythm: Constructs drum patterns based on the bassline’s beats or dynamic subdivisions played by the performer.

Modifiers:

- Gate: Responses only play when the performer is playing.

- Mute: Responses only play when the performer is not playing.

- Rand: Introduces randomness to synth selection.

- Dens: Controls the density of responses, with a chance to mute notes or chords.
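The Gate and Mute modifiers are mirror images of each other, which a small Python sketch makes visible. The article does not say how Thormulator decides whether the performer is playing, so the half-second silence threshold (`hold`) and both function names are assumptions.

```python
def performer_is_playing(now, last_onset, hold=0.5):
    """Assume the performer is 'playing' if a note started within the last `hold` seconds."""
    return (now - last_onset) <= hold

def allow_response(playing, gate=False, mute=False):
    """Gate: responses sound only while the performer plays.
    Mute: responses sound only while the performer is silent.
    """
    if gate and not playing:
        return False
    if mute and playing:
        return False
    return True
```

Switching both modifiers on at once would silence a response entirely in this sketch; whether the app allows that combination is not stated.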

Overarching Responses:

- Permutation: Plays repeats in different ways, preserving durations and delta times.

- Mirroring: Toggles mirroring of pitches around an axis, challenging the performer’s ear training.

- Transposition: Transposes pitches in repeat mode based on the root note’s movement.
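The three overarching responses are easy to state as operations on MIDI note numbers. The Python sketch below is an illustration only. Mirroring and transposition follow directly from the descriptions above, while `permute_pitches` reflects one possible reading of "preserving durations and delta times" (reordering pitches over an unchanged rhythm) and is an assumption, as are all names.

```python
def mirror(pitches, axis):
    """Reflect MIDI pitches around an axis: a note n semitones above the axis lands n below it."""
    return [2 * axis - p for p in pitches]

def transpose(pitches, old_root, new_root):
    """Shift all pitches by the interval the root note has moved."""
    shift = new_root - old_root
    return [p + shift for p in pitches]

def permute_pitches(notes, order):
    """Reorder pitches while each slot keeps its delta time and duration.

    notes: list of (pitch, delta_time, duration) tuples
    order: list of indices into notes, one per slot
    """
    return [(notes[i][0], dt, dur) for i, (_, dt, dur) in zip(order, notes)]
```

For example, mirroring a C major triad `[60, 64, 67]` around 60 gives `[60, 56, 53]`, an F minor triad spelled downward, which hints at why mirroring is a challenge for the ear.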

Instrument Groups:

- Play, Bass, Chord, Rhythm, and Audio In: Each group can use samplers or synths with modulation and audio FXs.

Future Prospects

The developmental trajectory focuses on several key areas: optimizing rule-based algorithms, expanding the mood and response databases, and broadening the sonic palette of the system. By addressing these areas, Thormulator will continue to evolve, offering even more sophisticated and intuitive musical interactions.

Upcoming developments will focus on sound design (new/better-sounding synths and audio FXs) and the development of more moods, which will likely also involve adding new types of responses to the system. The next mood will be developed around the idea of contrast: long ↔ short, sparse ↔ busy, fast ↔ slow. Integrating AI or machine learning algorithms is a possibility for future iterations, which could potentially offer deeper musical understanding and more nuanced responses, further enhancing the system’s adaptability and creativity.

Acknowledgments

Thank You

Eli Fieldsteel for guidance, the SuperCollider community at scsynth.org for answering small and large questions, Bo Madsen for model design and introducing me to ChatGPT, my parents and their spouses Benedicte, Ulla, Ib, and Boje for support and general interest, Michael Støvring and Jeff Jensen for moral support.

Note: This text was assisted by ChatGPT to enhance readability and structure, while maintaining the original content and ideas.

- Email: thor@thormadsen.com

- Subscribe: Thormulator YouTube Channel

- Thor: Facebook | Instagram | Website